Building on the work detailed in my previous blog post (Best Practices for Implementing Azure Data Factory), I was tasked by my delightful boss with turning this content into a simple checklist of what/why that others could use… I did so, slightly reluctantly. However, I wanted to do something better than simply transcribe the previous blog post into a checklist, so I decided to break out the Shell of Power and attempt to automate said checklist.

Sure, a checklist could be picked up and used by anyone, with answers manually provided by the person inspecting a given ADF resource. But what if the results could be handed to you on a plate, including things that aren’t always easy to spot via the Data Factory UI?

Supported by friends in the community, I had a poke around looking at ways a PowerShell script could do this for Data Factory, while also thinking about how to objectify an entire ADF instance. Sadly, hitting an existing ADF instance deployed in Azure wasn’t going to be an option:

- The Get-FactoryV2XXX cmdlets aren’t rich enough in terms of their outputs for the level of checks I wanted to perform.

- Permissions to production resources can often be an issue, especially when you want to run random bits of PowerShell against a business-critical environment.

- The checks needed to be done offline with the feedback given to a human to inform next steps. This is not intended to be a hard pass/fail test.

- It’s not always a given that a Data Factory instance will be connected to a source-controlled repository.

- Downloading a typical Az Resource template for an existing Data Factory isn’t yet supported using Get-AzResource.

- Via the Azure Portal UI, if you view a Resource Group and try to get an automation-generated ARM template, Data Factory doesn’t support this either, as seen below.

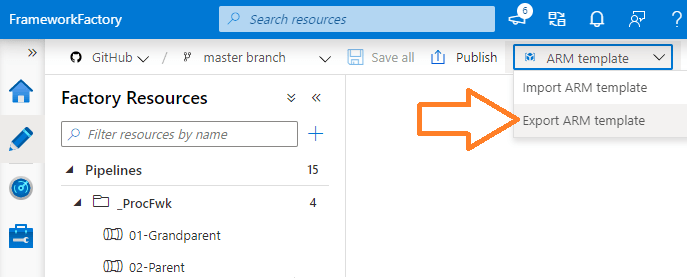

With the above frustrations in mind, my current approach is to use the ARM template that you can manually export from the Data Factory developer UI. This can be downloaded and then used locally with PowerShell.

Once downloaded and unzipped, this JSON file (arm_template.json) gave me everything I needed in a single place to query the target Data Factory export.
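For anyone wanting to experiment outside PowerShell, that first step can be sketched in Python: load the template, then bucket its `resources` array by type. This is a minimal sketch, not the script itself. It assumes the usual export layout, where each resource has a `type` such as `Microsoft.DataFactory/factories/pipelines` and a `name` expression like `[concat(parameters('factoryName'), '/PL_Copy')]`. The function names `load_template`, `resource_name` and `group_by_type` are hypothetical.

```python
import json
import re
from collections import defaultdict


def load_template(path):
    """Read the exported ARM template (e.g. arm_template.json) into a dict."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def resource_name(resource):
    """Extract the friendly name from an ADF export name expression.

    Assumes names look like "[concat(parameters('factoryName'), '/PL_Copy')]";
    any other value is returned unchanged.
    """
    name = resource.get("name", "")
    match = re.search(r"'/([^']+)'\)\]$", name)
    return match.group(1) if match else name


def group_by_type(template):
    """Bucket resources by the last segment of their type, e.g. 'pipelines'."""
    grouped = defaultdict(list)
    for resource in template.get("resources", []):
        short_type = resource.get("type", "").split("/")[-1]
        grouped[short_type].append(resource)
    return dict(grouped)
```

For example, `group_by_type(load_template("arm_template.json")).get("pipelines", [])` would return just the pipeline resources, ready for the individual checks.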

As a starting point for this script, I’ve created a set of 21 logic tests/checks using PowerShell to return details about the Data Factory ARM template. These include the following:

- Pipeline(s) without any triggers attached, directly or indirectly.

- Pipeline(s) with an impossible AND/OR activity execution chain.

- Pipeline(s) without a description value.

- Pipeline(s) not organised into folders.

- Pipeline(s) without annotations.

- Data Flow(s) without a description value.

- Activities with timeout values still set to the service default value of 7 days.

- Activities without a description value.

- ForEach activities without a batch count value set.

- ForEach activities with a batch count size that is less than the service maximum.

- Linked Service(s) not using Azure Key Vault to store credentials.

- Linked Service(s) not used by any other resource.

- Linked Service(s) without a description value.

- Linked Service(s) without annotations.

- Dataset(s) not used by any other resource.

- Dataset(s) without a description value.

- Dataset(s) not organised into folders.

- Dataset(s) without annotations.

- Trigger(s) not used by any other resource.

- Trigger(s) without a description value.

- Trigger(s) without annotations.
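To show how several of these checks can be derived from the template alone, here is a minimal Python sketch of three of them:

- activities still on the 7 day default timeout;
- parallel ForEach activities with no batch count, or one below the service maximum of 50;
- resources that nothing else references.

It assumes that:

- activities sit under a pipeline's `properties.activities`;
- child activities sit under `typeProperties.activities`, `ifTrueActivities` or `ifFalseActivities` (Switch cases are not traversed);
- references take the `{"referenceName": ..., "type": "DatasetReference"}` shape.

All function names are hypothetical.

```python
DEFAULT_TIMEOUT = "7.00:00:00"  # the 7 day default timeout described above
FOREACH_MAX_BATCH = 50          # ADF's documented maximum ForEach batchCount
# Keys under typeProperties that hold child activities (ForEach/Until, If Condition).
NESTED_KEYS = ("activities", "ifTrueActivities", "ifFalseActivities")


def iter_activities(activities):
    """Yield every activity, descending into common container activities."""
    for activity in activities:
        yield activity
        type_props = activity.get("typeProperties", {})
        for key in NESTED_KEYS:
            yield from iter_activities(type_props.get(key, []))


def activity_findings(pipeline_props):
    """Return (activity name, issue) tuples for one pipeline's properties dict."""
    findings = []
    for act in iter_activities(pipeline_props.get("activities", [])):
        name = act.get("name", "?")
        if act.get("policy", {}).get("timeout") == DEFAULT_TIMEOUT:
            findings.append((name, "timeout left at default 7 days"))
        type_props = act.get("typeProperties", {})
        # Batch count only matters for parallel (non-sequential) ForEach loops.
        if act.get("type") == "ForEach" and not type_props.get("isSequential", False):
            batch = type_props.get("batchCount")
            if batch is None:
                findings.append((name, "ForEach batch count not set"))
            elif batch < FOREACH_MAX_BATCH:
                findings.append((name, "ForEach batch count below service maximum"))
    return findings


def referenced_names(node, ref_type):
    """Collect referenceName values of the given reference type anywhere in node."""
    found = set()
    if isinstance(node, dict):
        if node.get("type") == ref_type and "referenceName" in node:
            found.add(node["referenceName"])
        for value in node.values():
            found |= referenced_names(value, ref_type)
    elif isinstance(node, list):
        for item in node:
            found |= referenced_names(item, ref_type)
    return found


def unused(names, resources, ref_type):
    """Return the names that no resource refers to via ref_type, sorted."""
    used = set()
    for resource in resources:
        used |= referenced_names(resource, ref_type)
    return sorted(n for n in names if n not in used)
```

The same reference walk can be pointed at `LinkedServiceReference` or `PipelineReference` to approximate the unused linked service and untriggered pipeline checks.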

Circa 1000+ lines of PowerShell later…

Each check has a severity rating (based on my experience), though in most cases there isn’t anything life threatening here. It’s purely informational and based on the assumption that a complete round of integration testing has already been done for your Data Factory.

The intention of the script is to improve on the basics and add quality to a development beyond simple functionality. For example, a Linked Service may operate perfectly, but using Key Vault to handle its credentials is a better practice we should be working towards.
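As an illustration of that Key Vault check, a linked service can be flagged when nothing in its `typeProperties` is an `AzureKeyVaultSecret` reference. This is a minimal heuristic sketch. It assumes ADF's usual JSON shape for Key Vault secrets, `{"type": "AzureKeyVaultSecret", "store": {...}, "secretName": ...}`, and that the Key Vault linked service itself has type `AzureKeyVault`. It does not treat managed identity authentication as acceptable, and the function names are hypothetical.

```python
def uses_key_vault(node):
    """True if any nested object is an AzureKeyVaultSecret reference."""
    if isinstance(node, dict):
        if node.get("type") == "AzureKeyVaultSecret":
            return True
        return any(uses_key_vault(value) for value in node.values())
    if isinstance(node, list):
        return any(uses_key_vault(item) for item in node)
    return False


def linked_services_without_key_vault(linked_services):
    """linked_services: mapping of name -> linked service 'properties' dict."""
    flagged = []
    for name, props in linked_services.items():
        if props.get("type") == "AzureKeyVault":
            continue  # the Key Vault linked service itself is not a credential consumer
        if not uses_key_vault(props.get("typeProperties", {})):
            flagged.append(name)
    return sorted(flagged)
```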

Ok, enough preaching!

Here is an example of the PowerShell script v0.1 output from one of my boss’s Data Factory instances, certainly not one of mine!! :-p

Code in Blog Supporting Content GitHub repository: https://github.com/mrpaulandrew/BlogSupportingContent

I’d be interested to know your thoughts on this and whether there are any other checks you’d like added. Not everything from my previous best practices blog can be checked via a single ARM template, but every little helps 🙂

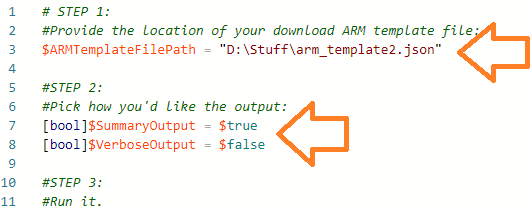

In terms of using the script, simply provide your unzipped ARM template file path, specify your preferred output table (summarised, verbose or both) and hit go.
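The summarised versus verbose choice can be thought of as two views over one list of findings: a count per check, or one row per offending resource. This is a minimal Python sketch, assuming each finding is a `(check, resource)` tuple. The `render` function and its `mode` values `summary`, `verbose` and `both` are hypothetical, not the script's actual parameters.

```python
from collections import Counter


def render(findings, mode="summary"):
    """Return report lines for findings, a list of (check, resource) tuples.

    mode: "summary" (count per check), "verbose" (one line per finding) or "both".
    """
    if mode not in ("summary", "verbose", "both"):
        raise ValueError(f"unknown mode: {mode}")
    lines = []
    if mode in ("summary", "both"):
        counts = Counter(check for check, _ in findings)
        for check, count in sorted(counts.items()):
            lines.append(f"{check}: {count}")
    if mode in ("verbose", "both"):
        for check, resource in sorted(findings):
            lines.append(f"{check} -> {resource}")
    return lines
```

For example, `print("\n".join(render(findings, mode="both")))` prints the per-check totals first, followed by the detailed rows.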

Direct link to script file in GitHub.

Long term I’ll harden this and wrap it up in a PowerShell module, maybe with some other Azure.DataFactory.Tools *cough* Kamil.

Many thanks for reading.

Interesting tool Paul, thanks for sharing! I can see how this can give a starting point for identifying where to improve, and it would be most effective running against a Production or Test factory; I know my development factories are full of things that aren’t best practices.

One thing I am not really on board with is that pipelines without triggers are a medium severity risk. We use the procfwk package extensively and the only pipeline we have with a trigger is the Grandparent; everything else is managed via metadata. I don’t see this as a problem at all!

On that note, have you planned any more updates to procfwk? It popped up on my radar at the precise moment I needed it for a new implementation and covered 95% of my requirements. I’d be very interested in any new development in that arena. We are going live with the orchestration system on January 1st!


Hey James, thanks for the feedback.

Yes, of course, using Workers triggered and bootstrapped by procfwk isn’t an issue. I did wonder how to include such a condition in the PowerShell logic. Are you using my framework? If so, gold star, move on :-p

I did a new small release of procfwk yesterday; it’s now at v1.9.1. Documentation is in progress here: procfwk.com

Feel free to request features via the GitHub issues.

My next big release will be to support Synapse pipelines.

Cheers


Hi Paul, very useful tool (as always). I just ran a quick test and have a couple of thoughts:

1) With the Linked Services, we use Managed Identity to authenticate access to the resources. Should this be secure enough? Your utility is currently flagging them with: “Not using Key Vault to store credentials”

2) I can totally see us using this script; I am just wondering whether some of the checks duplicate the logic in the stored procedure CheckMetaDataIntegrity?

Thanks for this, will definitely run more verifications against it.


Hey, yes, number 1 is great feedback, thanks. I’ll extend the check logic to include MSIs.

Number 2: the metadata integrity checks in the procfwk database are very separate from the intention of this discrete bit of PowerShell, so nothing is really being duplicated. They are for different things.

Cheers


Hello there Paul! Great initiative, thanks for sharing. To contribute from an ADF security perspective, this repo might help with some security use case ideas and checks. I’m currently working on Azure Sentinel KQL queries for ADF security. Not sure if it can be integrated into your checks, but sharing it for what it’s worth. Hoping that it can contribute somehow.

https://github.com/powerofknowing/adfsec/blob/main/adf-security-usecase.md


Thanks I’ll have a look.


Hi Paul, this is a great script! I have two questions about it:

1. Is there a newer version of this script available somewhere else than your BlogSupportingContent repository?

2. What is the license associated with it?


Will you please post your PowerShell script? The link isn’t working.
