Databricks vs Synapse Analytics

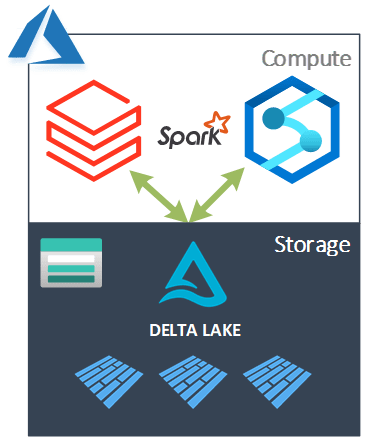

As an architect, I'm often challenged by customers on different approaches to data transformation solutions, mainly because they are concerned about locking themselves into a particular technology, resource or vendor. One example of this is using a Delta Lake to deliver an Azure-based data warehousing/analytics platform.

Given this context, in this blog post I want to explore the Delta technology and how it overcomes that concern.

As a side note, the critic in me might blame our experiences with Microsoft’s Data Lake Analytics offering for this newfound anxiety about resource lock-in. But let’s not go there!

A Quick History Lesson

Databricks Delta was announced by Databricks at the Spark + AI Summit back in November 2017. Reminder of the announcement here. For data engineers, this was certainly an exciting capability to have in our toolkit for data transformation workloads. But the concern about becoming locked into Databricks quickly surfaced. Sure, Databricks is available as a service on most cloud platforms, not just Azure, but it’s understandable that in 2017 we might not have wanted Delta tables for everything, because the technology appeared to be a proprietary Databricks capability.

Moving on. This concern was initially addressed when Databricks announced the release of Delta Lake v0.3.0 in August 2019. Reminder of the announcement here. This meant we now had an open-source version of Delta that can be used with any Spark implementation, assuming you install it. Of course, this isn’t as good as the Databricks premium implementation on top of Spark, but it’s still a great technology to have available on any Spark cluster.
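On a plain Apache Spark cluster, "assuming you install it" mostly means pulling in the Delta Lake package and registering its SQL extension and catalog with the Spark session. The sketch below collects those settings in one place. It assumes Spark 3.x with Delta Lake 0.7 or later (older Spark 2.4 / Delta 0.6.x setups, like the Synapse pool in the test environment below, were configured differently); the package version pin and the `delta_spark_conf` / `build_delta_session` helper names are illustrative assumptions, not part of any library.

```python
# Minimal sketch: Spark settings that enable open-source Delta Lake on a
# self-managed Spark 3.x cluster. ASSUMPTION: the package coordinate below
# (Delta Lake 1.0.0 for Scala 2.12) is only an example; pick the release
# that matches your Spark version.
DELTA_PACKAGE = "io.delta:delta-core_2.12:1.0.0"


def delta_spark_conf(package=DELTA_PACKAGE):
    """Return the Spark config entries needed to use Delta Lake (hypothetical helper)."""
    return {
        # Download the Delta Lake jar (and its dependencies) at session start.
        "spark.jars.packages": package,
        # Register Delta's SQL extensions (e.g. MERGE INTO, VACUUM).
        "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
        # Let the session catalog create and resolve Delta tables.
        "spark.sql.catalog.spark_catalog": (
            "org.apache.spark.sql.delta.catalog.DeltaCatalog"
        ),
    }


def build_delta_session(app_name="delta-sketch"):
    """Create a SparkSession with Delta enabled (requires pyspark to be installed)."""
    from pyspark.sql import SparkSession  # imported lazily so the config helper works without pyspark

    builder = SparkSession.builder.appName(app_name)
    for key, value in delta_spark_conf().items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


if __name__ == "__main__":
    # Print the settings, e.g. to paste into spark-defaults.conf.
    for key, value in delta_spark_conf().items():
        print(f"{key}={value}")
```

On Databricks and Synapse Spark pools none of this is needed, because the Delta libraries are already on the cluster.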

Then, the final hurdle in addressing this concern was cleared on Azure when Microsoft announced Synapse Analytics in November 2019, specifically the Apache Spark compute pools available as part of the resource. These managed compute clusters come with the Delta Lake libraries pre-installed and allow easy interaction with Delta tables via Synapse notebooks and the workspace.

History lesson over…

Now for the question that should be incredibly pertinent to all solution/technical leads delivering an Azure-based Delta Lakehouse.

Question: How Interchangeable Are Delta Tables Between Azure Databricks and Azure Synapse Analytics?

Answer: Very interchangeable! 🙂

Or, to ask the question another way…

Question: Can we use (read/write) Delta tables created in Azure Databricks with Azure Synapse Analytics – Spark Compute Pools and vice versa?

Answer: Yes!
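Why is this so interchangeable? A Delta table is just Parquet data files plus a `_delta_log` folder of JSON commit files, where each line is an action such as `add` or `remove`. Any engine that understands that log, whether Databricks or a Synapse Spark pool, can work out the current state of the table from the same storage path. The hypothetical `live_files` helper below replays the JSON commits in plain Python to list the current data files. It is a simplified sketch (it ignores checkpoint files and every action other than `add`/`remove`), not a replacement for the real Delta reader.

```python
import json
import os
import tempfile


def live_files(table_path):
    """Return the data files in the latest snapshot of a Delta table.

    Simplified replay of the JSON commits in ``_delta_log``: checkpoint
    Parquet files are ignored, so this only works while the full history
    is still available as JSON commits. Paths are returned exactly as
    stored in the log (relative to the table root).
    """
    log_dir = os.path.join(table_path, "_delta_log")
    # Commit files are zero-padded version numbers, so a lexical sort is
    # also a version-order sort. Non-JSON files (.crc, checkpoints) are skipped.
    commits = sorted(f for f in os.listdir(log_dir) if f.endswith(".json"))
    files = set()
    for name in commits:
        with open(os.path.join(log_dir, name)) as fh:
            for line in fh:
                if not line.strip():
                    continue
                action = json.loads(line)
                if "add" in action:
                    files.add(action["add"]["path"])
                elif "remove" in action:
                    files.discard(action["remove"]["path"])
    return sorted(files)


def write_commit(table_path, version, actions):
    """Write one JSON commit file (hypothetical helper for the demo)."""
    log_dir = os.path.join(table_path, "_delta_log")
    os.makedirs(log_dir, exist_ok=True)
    with open(os.path.join(log_dir, f"{version:020d}.json"), "w") as fh:
        for action in actions:
            fh.write(json.dumps(action) + "\n")


if __name__ == "__main__":
    table = tempfile.mkdtemp()
    # Version 0 adds two files; version 1 replaces one of them.
    write_commit(table, 0, [{"add": {"path": "part-0.parquet"}},
                            {"add": {"path": "part-1.parquet"}}])
    write_commit(table, 1, [{"remove": {"path": "part-0.parquet"}},
                            {"add": {"path": "part-2.parquet"}}])
    print(live_files(table))  # ['part-1.parquet', 'part-2.parquet']
```

Real `add` actions carry more fields (size, partition values, modification time), but the principle is the same: the table's state lives in storage, not in the compute engine.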

Let’s go a little deeper and explore some more specific technical scenarios.

Testing

Full credit to William Wright for testing these scenarios. Also, look out for Will’s own blog, which he’ll be starting very soon! 😉

| Scenario | Result | Comments |
| --- | --- | --- |
| Write DELTA in Databricks, read in Synapse | | |
| Write partitioned DELTA in Databricks, read in Synapse | | |
| Write DELTA in Synapse, read in Databricks | | |
| Write partitioned DELTA in Synapse, read in Databricks | | |
| In Synapse, merge into DELTA created by Databricks, then read in Synapse | | Using Python syntax; SQL MERGE not yet supported. |
| In Databricks, merge into DELTA created by Synapse, then read in Databricks | | |
| OPTIMIZE in Synapse | | Offered by IntelliSense, but not supported when executed. |
| VACUUM in Synapse | | |
| Read data from both Databricks and Synapse at the same time | | |
| Write data from both Databricks and Synapse at the same time (different data) | | Databricks completed. Synapse failed. |
| Write data from both Databricks and Synapse at the same time (same data) | | Both completed without error. However, executing both at exactly the same time wasn’t possible manually. |
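The concurrent-write rows come down to how Delta commits: each write tries to create the next numbered JSON file in `_delta_log`, the storage layer must guarantee that only one writer can create a given version, and the writer that loses the race has to re-read the log and either retry or fail with a conflict. The sketch below mimics that put-if-absent step on a local directory using `os.open` with `O_CREAT | O_EXCL`. The function names are hypothetical, the retry is deliberately naive (real Delta first checks whether the winning commit logically conflicts with what the loser read), and it does not attempt to reproduce the specific error Synapse raised in the test.

```python
import json
import os
import tempfile


def try_commit(log_dir, version, actions):
    """Atomically create the commit file for ``version`` if it is free.

    Returns True on success, False if another writer already created that
    version. O_CREAT | O_EXCL gives put-if-absent semantics on a local
    filesystem; cloud object stores need their own equivalent guarantee.
    """
    path = os.path.join(log_dir, f"{version:020d}.json")
    try:
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False  # lost the race for this version
    with os.fdopen(fd, "w") as fh:
        for action in actions:
            fh.write(json.dumps(action) + "\n")
    return True


def latest_version(log_dir):
    """Highest committed version, or -1 for an empty log."""
    versions = [int(f[:20]) for f in os.listdir(log_dir) if f.endswith(".json")]
    return max(versions, default=-1)


def commit_with_retry(log_dir, actions, max_attempts=3):
    """Naive optimistic commit: re-read the latest version and try the next one.

    SIMPLIFICATION: real Delta checks for logical conflicts with the
    winning commit before retrying; that check is omitted here.
    """
    for _ in range(max_attempts):
        version = latest_version(log_dir) + 1
        if try_commit(log_dir, version, actions):
            return version
    raise RuntimeError("could not commit after retries")


if __name__ == "__main__":
    log_dir = tempfile.mkdtemp()
    print(try_commit(log_dir, 0, [{"add": {"path": "a.parquet"}}]))   # True
    print(try_commit(log_dir, 0, [{"add": {"path": "b.parquet"}}]))   # False: version 0 is taken
    print(commit_with_retry(log_dir, [{"add": {"path": "b.parquet"}}]))  # 1
```

Because the whole protocol relies on that single atomic step in shared storage, Databricks and Synapse can coordinate writes to one table without knowing anything about each other.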

Test Environment

A few details about the versions of the services used:

Synapse

- Apache Spark Version 2.4

- Python Version 3.6.1

- Delta Lake Version 0.6.1

Databricks

- Runtime Version 7.4
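Version alignment matters here: the two services run different Delta Lake releases, and every Delta table records, in a `protocol` action in its log, the minimum reader and writer protocol versions a client must support. A quick way to check whether an engine can safely touch a table is to read that action and compare. In the sketch below, `SUPPORTED_READER_VERSION` and `SUPPORTED_WRITER_VERSION` are placeholder values for illustration, not the documented limits of Delta Lake 0.6.1 or Databricks Runtime 7.4, and the helper only scans JSON commits, not checkpoints.

```python
import json
import os
import tempfile

# PLACEHOLDERS: the highest protocol versions a hypothetical engine
# understands. Check your Delta Lake release notes for the real values.
SUPPORTED_READER_VERSION = 1
SUPPORTED_WRITER_VERSION = 2


def table_protocol(table_path):
    """Return (minReaderVersion, minWriterVersion) from the latest protocol action."""
    log_dir = os.path.join(table_path, "_delta_log")
    protocol = None
    for name in sorted(f for f in os.listdir(log_dir) if f.endswith(".json")):
        with open(os.path.join(log_dir, name)) as fh:
            for line in fh:
                if line.strip():
                    action = json.loads(line)
                    if "protocol" in action:
                        protocol = action["protocol"]  # later commits override earlier ones
    if protocol is None:
        raise ValueError("no protocol action found in JSON commits")
    return protocol["minReaderVersion"], protocol["minWriterVersion"]


def can_read_write(table_path, reader=SUPPORTED_READER_VERSION,
                   writer=SUPPORTED_WRITER_VERSION):
    """Return (can_read, can_write) for an engine with the given protocol support."""
    min_reader, min_writer = table_protocol(table_path)
    return min_reader <= reader, min_writer <= writer


def write_commit(table_path, version, actions):
    """Write one JSON commit file (hypothetical helper for the demo)."""
    log_dir = os.path.join(table_path, "_delta_log")
    os.makedirs(log_dir, exist_ok=True)
    with open(os.path.join(log_dir, f"{version:020d}.json"), "w") as fh:
        for action in actions:
            fh.write(json.dumps(action) + "\n")


if __name__ == "__main__":
    table = tempfile.mkdtemp()
    write_commit(table, 0, [{"protocol": {"minReaderVersion": 1, "minWriterVersion": 2}}])
    print(can_read_write(table))  # (True, True)
    # A newer engine upgrading the table's protocol locks out the older one.
    write_commit(table, 1, [{"protocol": {"minReaderVersion": 2, "minWriterVersion": 5}}])
    print(can_read_write(table))  # (False, False)
```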

Conclusion

In my opinion, Delta is, and should now be, the standard for delivering data warehousing/analytics capabilities within an Azure Data Platform solution. The technology is, for the most part, open source and allows storage to be decoupled from the choice of compute.

I hope this blog was helpful.

Many thanks for reading.

Hey Paul, great article!!

I’ve had many clients asking to have a delta lake built with synapse spark pools, but with the ability to read the tables from the on-demand sql pool. I’ve tested and tested but it seems that the sql part of synapse is only able to read parquet at the moment, and it is not easy to feed an analysis services model from spark. How do you address that issue?


Hey, yes correct. Delta tables aren’t yet supported via SQL On Demand. Not sure what Microsoft have planned here. I don’t have a good work around to this situation yet. Thanks


Is there a separate post which explains how to explore Delta tables created in Databricks using Synapse?

Also, I’m interested in seeing the testing methods or steps used by William Wright.

Are they documented anywhere?


Hello, this was a great, informative post. Also, what was the speed difference for a similar-size cluster?


In Databricks you only have to run one command for compaction and vacuum, and the rest is taken care of. How can I do the same in a Synapse Spark pool?
