There were many great announcements to come out of the Microsoft Ignite 2018 conference, but the main one for me was the introduction of Azure Data Factory Data Flow. As a business intelligence person with many years’ experience working with SSIS packages, I find this new feature very exciting! Since I first saw the mock-up screens at the MVP Summit back in March 2018 I’ve been dying to talk about it. Well, despite it still being in private preview there has been plenty of public chatter, so here’s a quick introduction to the feature from me.

What is it?

Azure Data Factory Data Flow, or ADF-DF (as it shall now be known), is a cloud-native graphical data transformation tool that sits within our Azure Data Factory platform-as-a-service product. What’s more, ADF-DF can be considered a firm Azure equivalent of our on-premises SSIS package data flow engine. “Is Data Factory SSIS in the cloud?” has been a long-standing question, and with this latest addition to the service I’m now comfortable saying the answer is yes. Our Data Factory pipelines offer dynamic control flow behaviour, Data Flow offers transformations to manipulate our datasets, and pipeline triggers offer SQL Agent-style scheduling as well as event-based execution.

Given the above, I think it’s time to update the question to: what more can an ETL developer ask for in Azure? 🙂

Going a little deeper, ADF-DF offers a rich drag-and-drop user interface, seen above, for building data transformation processes in Azure, currently with a suite of 13 different dataset manipulation operations:

- Branch

- Join

- Conditional Split

- Union

- Lookup

- Derived Column

- Aggregate

- Surrogate Key

- Exists

- Select

- Filter

- Sort

- Extend

I assume the product team are planning to increase functionality, but for now this is a pretty good start.
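To give a flavour of what a few of these transformations do, here is a minimal sketch in plain Python. It is not ADF-DF code and nothing like it needs to be written when using the drag and drop interface; the sample rows and column names are invented purely for illustration.

```python
from collections import defaultdict

# Hypothetical sample rows; in Data Flow these would come from a source dataset.
rows = [
    {"region": "North", "units": 3, "unit_price": 10.0},
    {"region": "South", "units": 0, "unit_price": 12.5},
    {"region": "North", "units": 2, "unit_price": 20.0},
]

# Filter: keep only the rows that match a condition.
filtered = [r for r in rows if r["units"] > 0]

# Derived Column: add a column computed from existing columns.
derived = [{**r, "revenue": r["units"] * r["unit_price"]} for r in filtered]

# Surrogate Key: attach an incrementing key to each row.
keyed = [{"sk": i, **r} for i, r in enumerate(derived, start=1)]

# Aggregate: group by a column and sum a measure.
totals = defaultdict(float)
for r in keyed:
    totals[r["region"]] += r["revenue"]

print(dict(totals))
```

Each step takes the output of the previous one, which is the same chaining idea that a data flow expresses graphically.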

Why is Data Flow exciting?

Ok, you might think this is an odd subheading for an introduction post, and it is. But is Data Flow exciting? Yes, it sure is. The reason is how the new feature executes the transformation pipelines we build. *drum roll* Azure Data Factory uses Azure Databricks as the compute for those data transformations.

You are probably already aware that within an ADF pipeline we have activities to invoke Azure Databricks as a control flow component, seen on the right. These activities can call a Python file, a Jupyter Notebook or some compiled Scala in a Jar file. All three options require us to write either Python or Scala to process our data, which is fine, but not necessarily fine for someone more at home in an SSIS package environment, where C# is the normal means available to extend packages.

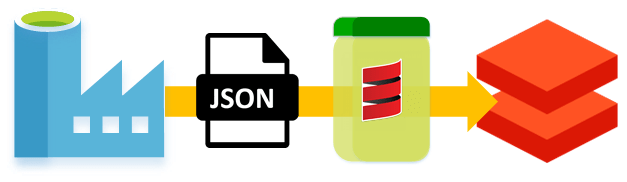

Well, with Data Flow, Microsoft have done something really special. The JSON output from the graphical ADF-DF user interface is used to write the Scala for us! This then gets compiled into a Jar file and passed to Azure Databricks to execute as a job on a given cluster (defined via ADF linked services as normal).
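The general idea of turning a JSON description into Scala can be sketched with a toy generator. This is only an illustration of the concept: the JSON layout, the step names and the `generate_scala` helper below are all hypothetical, and neither the real ADF-DF JSON schema nor the Scala that Microsoft actually generates is shown in this post. The emitted lines use ordinary Spark DataFrame calls in Scala, and the `expr` call would need `org.apache.spark.sql.functions.expr` imported in a real job.

```python
import json

# Hypothetical, simplified description of a data flow.
# This is NOT the real ADF-DF JSON schema.
flow_json = """
{
  "source": "sales",
  "steps": [
    {"type": "filter", "condition": "units > 0"},
    {"type": "derivedColumn", "name": "revenue", "expression": "units * unit_price"},
    {"type": "sort", "column": "revenue"}
  ]
}
"""

# Map each hypothetical step type to a template for a Spark DataFrame call in Scala.
TEMPLATES = {
    "filter": '.filter("{condition}")',
    "derivedColumn": '.withColumn("{name}", expr("{expression}"))',
    "sort": '.orderBy("{column}")',
}


def generate_scala(flow: dict) -> str:
    """Walk the steps in order and emit one chained Scala call per step."""
    lines = [f'val result = spark.table("{flow["source"]}")']
    for step in flow["steps"]:
        template = TEMPLATES[step["type"]]
        # Everything except "type" is treated as a template parameter.
        params = {k: v for k, v in step.items() if k != "type"}
        lines.append("  " + template.format(**params))
    return "\n".join(lines)


scala_code = generate_scala(json.loads(flow_json))
print(scala_code)
```

In this toy version the generated text is simply printed; in the service, as described above, the generated Scala is compiled and handed to a Databricks cluster.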

SSIS friends, you don’t need to retrain as Python and/or Scala developers 🙂

Ambiguous Terminology

It seems we have no original names anymore, so please be aware of the following conversational traps that I have already fallen into several times.

- Data Flow is now also a feature available within the Power BI suite. When talking about Data Flow and Data Flow from two different services this can get really confusing. Be aware.

- We now have a Lookup activity within our ADF pipelines as well as a Lookup transformation within the new Data Flow feature (just like SSIS). Make sure you understand the difference and get the context correct.

There is obviously a lot more to share regarding Azure Data Factory Data Flow, so watch this space. I’ll line up some more posts as the new feature is revealed and becomes publicly available.

Many thanks for reading.

Hi Paul, I attended your session on this last year at Data Relay. I was wondering if this feature is still in public preview or whether it is generally available now? I am struggling to find out.

Thanks

Still in preview at the moment. Hopefully won’t be long now 🙂

These examples always start with such beautifully organised text files or JSON and can be combined as-is. When the original data sets are text files from multiple providers, that may need to be unzipped, or decrypted, are character delimited or fixed width, header rows need to be skipped or added in, column values need to be joined on several “mapping tables” depending on whether it is a currency code, or stock tickers, or other attributes, some settings depend on the file, some individual to each row in the file… the list goes on.

Does this stage have a place in the Azure stack?

Hey Geraint, sure, but maybe a different service is required until Data Flows matures to handle greater complexity. Thanks
