I’ve been playing around with Azure Synapse Analytics for a while now exploring the preview features and trying to find a meaningful use case for the ‘single pane of glass’ capabilities. In this post I’m exploring one possible option/idea for creating a very simple self service approach to dataset ingestion and consumption. Full disclosure, the below is far from technical perfection for lots of reasons, I mainly wanted to put something out there as an idea and use it to maybe start a conversation.

So, here’s what I did in picture form first and all within the Azure Synapse Analytics workspace:

Source – data housed on an Azure Virtual Machine, which in theory could be any business users PC.

Ingestion – firstly, this is handled by a Hosted Integration Runtime with files copied and converted depending on there type. Initially, I did this for CSV, TXT and XLS files, but of course the pipeline could easily be extended to include others. Secondly, once landed in Synapse connected storage a Spark.Net (C#) Notebook infers the given dataset schema and creates it as an external table within the SQL On-Demand compute pool.

Consume – using a Power BI workbook published and connected to by Synapse Workspace I was able to work with the raw/simple datasets.

The key to this approach has to be the common metadata model that Synapse uses for all datasets registered/created as a tables via a SQL Pool, via Hive or the SQL On-Demand compute. Having this structure and endpoint to connect to makes exploring anything in the Data Lake really easy and the following just automates that handling.

Lets look more at the internals of the process:

Ingestion

The specifics of the data ingestion uses a Metadata Activity within a Synapse Orchestrate pipelines to firstly query and upload files from the ‘local’ source directory. Expressions in the pipeline dynamically set the target folder path within the Data Lake and Switch between different Copy activities depending on the file extension. Expression fun for that switch case condition below:

@substring(item().name,add(indexof(item().name,'.'),1),sub(length(item().name),add(indexof(item().name,'.'),1)))

Side note; this type of generic copy and ingest pattern using a Switch activity is definitely something worth exploring on its own if your a fan of dynamic pipelines.

The complete pipeline activity chain can be seen below.

Consumption

To consume the data in Power BI using the SQL On-Demand compute I used the Apache Spark pools with a Notebook written in C# to firstly read the ingested CSV files (inferring dataset schemas), write out a Parquet version of the dataset, then create a table from the Parquet file (which could be done and queried in SQL as well), but I stuck to the C# data frame SaveAsTable method.

A visual of the Notebook I created can be seen below, the actual code is in my GitHub repository that I manually exported given Synapse still doesn’t have source code functionality.

Once done you can browse the SQL On-Demand endpoint via SSMS to view the external tables created, or via the Synapse Workspace data panel if you prefer. Either way, the table have an external resource connecting them to the underlying Parquet file.

Once done you can browse the SQL On-Demand endpoint via SSMS to view the external tables created, or via the Synapse Workspace data panel if you prefer. Either way, the table have an external resource connecting them to the underlying Parquet file.

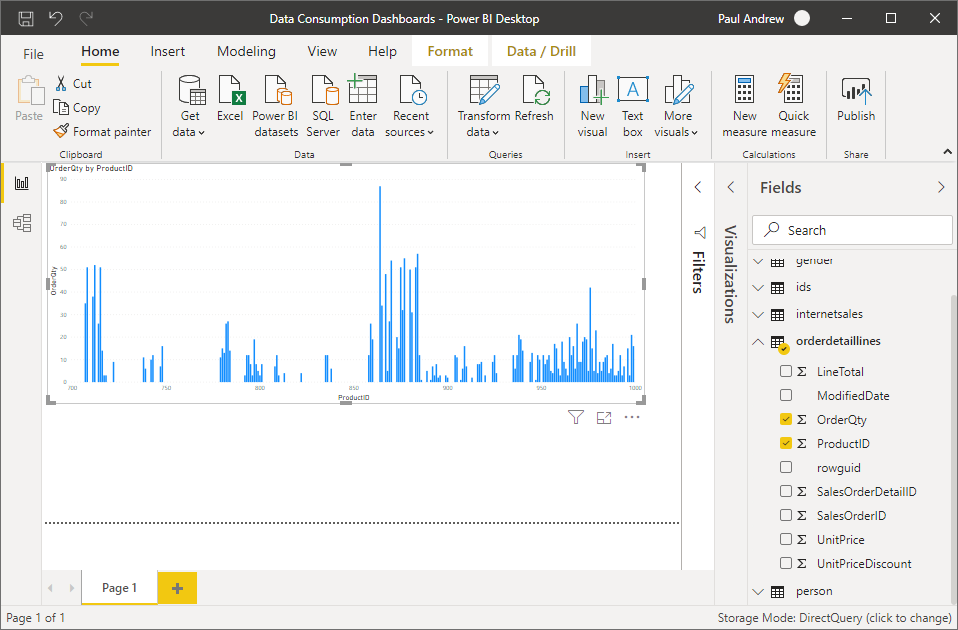

Finally, with my Power BI Workspace connected to Synapse I created and published a Power BI desktop file including the connection string to the SQL On-Demand endpoint. Then I created some amazing, mind blowing visuals on a dashboard, not really, I don’t do visuals, but you can 🙂

Conclusion

So, what was the point of all this Paul?

Well, really it was to explore and automate a possible self service pattern that takes advantage of the Synapse SQL On-Demand compute. Plus, play around some more with C# in a Synapse Notebook. Then, in theory this pipeline could be scheduled to run hourly allowing business users to upload data to the Data Lake on an ad-hoc basis, consume the data almost straight away and share dashboards using the centrally backed Synapse compute.

What do you think? Is this a pattern you could make use of?

In a later post I might extend the approach to include some merge handling for datasets that have already been ingested if the business user wanted to upload a new version of the file.

Code in Blog Supporting Content GitHub repository.![]() https://github.com/mrpaulandrew/BlogSupportingContent

https://github.com/mrpaulandrew/BlogSupportingContent

Many thanks for reading.