Code Project Overview

This open source code project delivers a simple metadata driven processing framework for Azure Data Factory (ADF). The framework is made possible by coupling ADF with an Azure SQL Database that houses execution stage and pipeline information, which is later called using an Azure Functions App. The parent/child metadata structure firstly allows stages of dependencies to be executed in sequence. Secondly, it allows all pipelines within a stage to be executed in parallel, offering scaled out control flows where no inter-dependencies exist.
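As a rough illustration of the stage/pipeline pattern described above, the sketch below runs stages one after another and the pipelines inside each stage in parallel. It is plain Python rather than Data Factory itself, and the stage metadata, pipeline names and `run_worker` function are all hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical metadata: stage number -> Worker pipeline names in that stage.
STAGES = {
    1: ["Extract A", "Extract B"],
    2: ["Transform"],
    3: ["Load X", "Load Y"],
}


def run_worker(pipeline_name: str) -> str:
    """Stand-in for triggering a Worker pipeline; returns a result status."""
    return f"{pipeline_name}: Success"


def run_framework(stages: dict[int, list[str]]) -> list[list[str]]:
    """Run stages in order; run the pipelines inside each stage in parallel."""
    results = []
    for stage_id in sorted(stages):
        with ThreadPoolExecutor() as pool:
            # map() preserves input order, and leaving the 'with' block waits
            # for every pipeline in the stage before the next stage starts.
            results.append(list(pool.map(run_worker, stages[stage_id])))
    return results


if __name__ == "__main__":
    for stage_results in run_framework(STAGES):
        print(stage_results)
```

The point of the sketch is only the ordering guarantee: nothing in stage 2 starts until everything in stage 1 has finished.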

The framework is designed to integrate with any existing Data Factory solution by making the lowest level executor a stand alone Worker pipeline that is wrapped in a higher level of controlled (sequential) dependencies. This level of abstraction means operationally nothing about the monitoring of orchestration processes is hidden in multiple levels of dynamic activity calls. Instead, everything from the processing pipeline doing the work (the Worker) can be inspected using out-of-the-box ADF features.

This framework can also be used in any Azure Tenant and allows the creation of complex control flows across multiple Data Factory resources by connecting Service Principal details through metadata to targeted Subscriptions > Resource Groups > Data Factories and Pipelines. This offers very granular administration over data processing components in a given environment.

Framework Key Features

- Granular metadata control.

- Metadata integrity checking.

- Global properties.

- Dependency handling.

- Execution restart-ability.

- Parallel execution.

- Full execution and error logs.

- Operational dashboards.

- Low cost orchestration.

- Disconnection between framework and Worker pipelines.

- Cross Data Factory control flows.

- Pipeline parameter support.

- Simple troubleshooting.

- Easy deployment.

- Email alerting.

ADF.procfwk Resources

| Resource | Link |
|---|---|
| Blogs | mrpaulandrew.com/ADF.procfwk |
| GitHub | github.com/mrpaulandrew/ADF.procfwk |
| Hashtag | #ADFprocfwk |
| Vlogs | youtube.com/mrpaulandrew |

Thank you for visiting, details on the latest framework release can be found below.

Version 1.7 of ADF.procfwk is ready!

Release Overview

The primary goal of this release was to implement email alerting within the existing processing framework, using existing metadata driven practices to deliver it in an easy to control, flexible and granular way. With that in mind, the following statements have been met in terms of alerting capabilities and design.

- Email alerting is a completely optional feature that can be turned on/off via the main database properties table.

- Recipient email addresses are stored as metadata within the framework database.

- Recipients can be enabled/disabled as required.

- Recipients can subscribe to one or many Worker pipelines to receive alerts.

- Recipients can optionally be included in the email message as the main receiver (To), copied (CC) or blind copied (BCC).

- Pipeline alert subscriptions (links) can be enabled/disabled as required.

- Pipeline alert subscriptions (links) can be set up for all outcomes, or for a particular Worker pipeline outcome, using the pipeline result status from the current execution table. For example: Success, Failed, Cancelled etc.

- Alerting content can be customised using a central email body template with Data Factory and Pipeline content injected into the underlying HTML message at runtime.

- SMTP details are configured via the Azure Function ‘SendEmail’ application settings.

- Email traffic is reduced to only a single message sent per Worker pipeline, not one message per recipient.

Finally, the key principle was to implement this feature in such a way that very few changes are needed to the current core Data Factory pipelines, and to apply just the right amount of effort in the metadata layer to avoid lots of new Activities and expressions.

Database Changes

To support the new email alerting capabilities several database changes have been made. The main reason for this was to absorb as much work as possible into the metadata database without needing to burden Data Factory with lots of additional steps in the framework core pipelines (Grandparent > Infant).

Properties

Firstly, let’s cover off the two new properties added to the processing framework that are used to support other metadata operations for alerting. There is good reason for starting with these.

- UseFrameworkEmailAlerting – As stated above, the alerting features of the framework are completely optional. You may already have a different way of producing alerts for your Data Factory pipelines. If so, simply set this property value to ‘0’ and alerting will be disabled for your processing framework. You can also skip most of this blog post 😉

- EmailAlertBodyTemplate – This property value, as the name suggests, is used to house the basic HTML template that at runtime is used as the email body. The HTML tags provide some basic formatting for the processing details to be injected in a user friendly way. At runtime the actual Worker pipeline details are added to the template by replacing the triple hashed parts of the overall string. For example:

SELECT REPLACE(@EmailBody,'##PipelineName###',[PipelineName]).
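The same token replacement idea can be sketched outside the database. The snippet below is a minimal Python illustration, not the framework's stored procedure: the template text and the `###Status###` token are made up for the example, and only the `##PipelineName###` token is copied from the SQL line above.

```python
# Hypothetical HTML template. Only the ##PipelineName### token comes from the
# SQL example in the post; the ###Status### token is invented for illustration.
EMAIL_BODY_TEMPLATE = (
    "<p>Pipeline: <b>##PipelineName###</b></p>"
    "<p>Status: ###Status###</p>"
)


def render_email_body(template: str, values: dict[str, str]) -> str:
    """Replace each placeholder token in the template with its runtime value."""
    body = template
    for token, value in values.items():
        body = body.replace(token, value)
    return body


if __name__ == "__main__":
    print(
        render_email_body(
            EMAIL_BODY_TEMPLATE,
            {"##PipelineName###": "Wait 10", "###Status###": "Success"},
        )
    )
```

Keeping the template as a single property value means the message layout can be changed in metadata without touching any pipeline.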

As with all other properties these are added to the database via the Post Deployment scripts within the data tools project using the stored procedure [procfwk].[AddProperty].

Tables

The latest database diagram below shows the new tables used to support the email alerting. This image is also available in the repository here.

The narrative and rationale for these three new tables:

- [procfwk].[Recipients] – Here names and email addresses are stored and coupled with the attribute MessagePreference to drive To/CC/BCC behaviour. Table constraints provide validation and ensure unique values are entered for addresses + preferences. By default recipients will have a message preference of ‘To’.

- [procfwk].[AlertOutcomes] – This table is used to support the Worker pipeline outcome, allowing recipients to subscribe to any combination of preferred status values. I toyed with the idea of handling this with a further set of normalised tables before concluding that using a bit mask was the simplest way to achieve the required result. The primary key of the table is used to drive the bit position and uses an IDENTITY seeded to zero. Then the computed BitValue attribute informs which status is requested for comparison using the & operator in the subsequent stored procedures. Finally, to ensure integrity in the metadata, a unique constraint has been added to the pipeline status value.

- [procfwk].[PipelineAlertLink] – As the name suggests, this table houses the foreign keys used to drive the alerts to be sent for a given recipient and pipeline, accompanied by the outcome bit value for the given subscription requirements. This is clearer in the database diagram above.

The combination of this metadata means that a recipient could, for example….

- Be copied on Success alerts for some Worker pipelines…

- Receive Failed alerts as the primary (To) recipient for other Worker pipelines…

- Be blind copied (BCC) on all cancelled Worker pipelines.

Or, some other completely flexible setup. I recommend using the code snippets added to the handy Azure Data Studio Notebook when adding your own metadata combinations.
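To make the bit mask idea behind [procfwk].[AlertOutcomes] more concrete, here is a small Python sketch. It assumes three outcome rows in the order Success, Failed, Cancelled with bit positions starting at zero; the real table contents and ordering may differ, and the function name is hypothetical.

```python
# Assumed outcome rows, in identity order starting at zero. The real table
# may hold different statuses or a different order.
STATUSES = ["Success", "Failed", "Cancelled"]

# Bit value = 2 ^ bit position, mirroring the computed BitValue attribute.
BIT_VALUES = {status: 2 ** position for position, status in enumerate(STATUSES)}


def is_subscribed(subscription_mask: int, pipeline_status: str) -> bool:
    """True when the subscription mask includes the given pipeline status."""
    return (subscription_mask & BIT_VALUES[pipeline_status]) != 0


if __name__ == "__main__":
    # A recipient subscribed to Success and Cancelled outcomes only.
    mask = BIT_VALUES["Success"] | BIT_VALUES["Cancelled"]
    for status in STATUSES:
        print(status, is_subscribed(mask, status))
```

A single integer per subscription is enough to hold any combination of outcomes, which is what avoids the extra normalised tables.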

Procedures

I’ve broken down the stored procedures into two further categories: framework support procedures (called by Data Factory) and helper procedures that could be used ad hoc when setting up your framework database.

Framework Support:

- [procfwk].[CheckForEmailAlerts] – This procedure performs two important checks against the metadata. Firstly, getting the main database property value to establish if the email alerting feature is enabled. Then inspecting the table [procfwk].[PipelineAlertLink] to establish if any alert subscriptions exist for the current Worker pipeline ID. The result will be a Boolean 1 or 0 to inform what Data Factory does next.

- [procfwk].[GetEmailAlertParts] – This procedure is key to the alerting process as it does the majority of the work in gathering recipients, creating the email subject, email body and setting the message importance. The returned attributes are aligned and aliased exactly as they are required for the request body of the ‘SendEmail’ Function detailed below.
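The kind of work [procfwk].[GetEmailAlertParts] does can be approximated in a few lines of Python. This is only a sketch under assumptions: the recipient rows are invented, the comma separator and the rule for setting importance are guesses, and the key names are taken from the example request body shown in the Function Changes section.

```python
# Hypothetical recipient rows: (email address, message preference).
RECIPIENTS = [
    ("test.user1@adfprocfwk.com", "To"),
    ("test.user2@adfprocfwk.com", "CC"),
    ("test.user3@adfprocfwk.com", "BCC"),
    ("test.user4@adfprocfwk.com", "To"),
]


def build_email_parts(recipients, pipeline_name: str, status: str) -> dict:
    """Group recipients by preference and return one request body per pipeline."""
    grouped = {"To": [], "CC": [], "BCC": []}
    for address, preference in recipients:
        grouped[preference].append(address)
    return {
        # Assumption: multiple addresses are joined with a comma.
        "emailRecipients": ",".join(grouped["To"]),
        "emailCcRecipients": ",".join(grouped["CC"]),
        "emailBccRecipients": ",".join(grouped["BCC"]),
        "emailSubject": f"ADFprocfwk Alert: {pipeline_name} - {status}",
        "emailBody": f"{pipeline_name} - {status}",
        # Assumption: failures are flagged as high importance.
        "emailImportance": "High" if status == "Failed" else "Low",
    }


if __name__ == "__main__":
    print(build_email_parts(RECIPIENTS, "Wait 10", "Success"))
```

Because every recipient is folded into a single set of To/CC/BCC values, only one message is needed per Worker pipeline.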

Helpers:

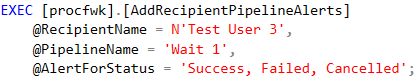

- [procfwk].[AddRecipientPipelineAlerts] – Assuming a recipient email address record has already been added, this procedure creates the alert link between the recipient record and the Worker pipeline(s). Default parameters can be used to add the link for either a specific pipeline or all pipelines, and then for either all pipeline status values or just a subset. A comma separated string can be used to translate the named values into the correct bitmask value. Example below:

This code snippet can also be found in the project post deployment scripts.

- [procfwk].[DeleteRecipientAlerts] – This procedure provides the opposite effect to the ‘add’ procedure above. It also supports soft deletions, using the ‘Enabled’ attributes of the link table and recipients table to UPDATE them to ‘0’ if a hard DELETE isn’t yet required. Soft deletions are the default parameter behaviour when executing the procedure.
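The translation of a comma separated string of status names into a single bitmask value, as described for [procfwk].[AddRecipientPipelineAlerts], might look something like the Python sketch below. The status names and their bit values are assumptions for illustration, and the function name is hypothetical.

```python
# Assumed status names and bit values; the real metadata may differ.
BIT_VALUES = {"Success": 1, "Failed": 2, "Cancelled": 4}


def mask_from_status_list(status_list: str) -> int:
    """Combine a comma separated list of status names into one bitmask."""
    mask = 0
    for name in status_list.split(","):
        name = name.strip()
        if name not in BIT_VALUES:
            raise ValueError(f"Unknown pipeline status: {name!r}")
        # OR-ing keeps the result stable even if a name is repeated.
        mask |= BIT_VALUES[name]
    return mask


if __name__ == "__main__":
    print(mask_from_status_list("Success, Failed"))
```

Rejecting unknown names up front mirrors the intent of validating metadata before it reaches the link table.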

Function Changes

In line with the primary reason for this release, the only changes in the Azure Functions App relate to the ‘SendEmail’ Function, as follows.

Send Email

Building on the details of this Function shared in the previous release of the procfwk (v1.6), the following changes now apply.

- BCC recipients have been added as an optional parameter that can now be passed in the Function request body.

- To recipients are no longer mandatory in the Function body request.

- Additional log information added to output the number of recipients included as To/CC/BCC for the send email operation.

- Request body validation improved to check for minimum values before establishing the mail client and message. The request must have:

  - Either To, CC or BCC recipients. At least one type of recipient is needed.

  - An email subject.

  - An email body.

An example of a complete request body for this Function is shown below.

{

"emailRecipients": "test.user1@adfprocfwk.com",

"emailCcRecipients": "test.user2@adfprocfwk.com",

"emailBccRecipients": "test.user3@adfprocfwk.com",

"emailSubject": "ADFprocfwk Alert: Wait 10 - Success",

"emailBody": "Wait 10 - Success",

"emailImportance": "Low"

}
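The minimum value checks listed above can be expressed as a small validation routine. The sketch below is Python for illustration only, not the Function's actual code; it reuses the key names from the example request body, and the error messages are invented.

```python
def validate_request(body: dict) -> list[str]:
    """Return a list of validation errors; an empty list means the body is valid."""
    errors = []
    recipient_keys = ("emailRecipients", "emailCcRecipients", "emailBccRecipients")

    # At least one of To, CC or BCC must contain a non-blank value.
    if not any(str(body.get(key) or "").strip() for key in recipient_keys):
        errors.append("At least one of To, CC or BCC recipients is required.")
    if not str(body.get("emailSubject") or "").strip():
        errors.append("An email subject is required.")
    if not str(body.get("emailBody") or "").strip():
        errors.append("An email body is required.")
    return errors


if __name__ == "__main__":
    request_body = {
        "emailBccRecipients": "test.user3@adfprocfwk.com",
        "emailSubject": "ADFprocfwk Alert: Wait 10 - Success",
        "emailBody": "Wait 10 - Success",
    }
    print(validate_request(request_body))  # valid: BCC only is allowed
    print(validate_request({}))
```

Running the checks before creating the mail client means an incomplete request fails fast without opening an SMTP connection.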

Data Factory Changes

To support the new email alerting capabilities the Child pipeline has been extended to include the call to the ‘SendEmail’ Azure Function. This was included in its basic form in the previous release, but has now been fully implemented and improved as detailed in the Function Changes section above.

Snippet of the latest Activity chain below. The complete v1.7 Activity chain picture is available in PowerPoint here.

The new Activities perform the following operations in the context of the framework alerting and metadata details already established in the Database Changes section.

- Lookup (Check For Alerts) – As detailed in the Database Changes section, a new property has been included to optionally enable or disable the framework email alerting. This lookup hits the stored procedure [procfwk].[CheckForEmailAlerts] to assert firstly if alerting is enabled and secondly if there are any recipients associated with the current Worker pipeline ID being executed.

- If Condition (Send Alerts) – To avoid unnecessary work, all email sending is wrapped in this If Condition activity, which has its Boolean value supplied by the previous ‘Check For Alerts’ Lookup. If the true condition is met by the metadata, then:

  - Lookup (Get Email Parts) – This lookup calls the stored procedure [procfwk].[GetEmailAlertParts] and returns a single row of values that make up the email content to be sent.

  - Azure Function (Send Email) – Finally, an Azure Function Activity is used to hit the ‘SendEmail’ code with the body of the request supplied completely by the previous lookup and corresponding stored procedure. As the Function is part of the existing Framework Functions App the same Linked Service connection is used to authenticate.
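The control flow of these new Activities can be summarised in a short Python sketch. This is a simplification under assumptions, not the pipeline definition: the function names are hypothetical, and the two callables stand in for the ‘Get Email Parts’ lookup and the ‘SendEmail’ Function call.

```python
from typing import Callable


def check_for_email_alerts(alerting_enabled: bool, subscription_count: int) -> bool:
    """Alerts are sent only if the feature is on and a subscription exists."""
    return alerting_enabled and subscription_count > 0


def run_alerting(
    alerting_enabled: bool,
    subscription_count: int,
    get_email_parts: Callable[[], dict],
    send_email: Callable[[dict], None],
) -> bool:
    """Mirror the Lookup > If Condition > Lookup > Azure Function chain."""
    if not check_for_email_alerts(alerting_enabled, subscription_count):
        # The If Condition is false, so no further work is done.
        return False
    send_email(get_email_parts())
    return True


if __name__ == "__main__":
    outbox = []
    sent = run_alerting(
        True, 2, lambda: {"emailSubject": "Wait 10 - Success"}, outbox.append
    )
    print(sent, outbox)
```

The cheap check runs first, so pipelines with no subscriptions never pay for the second lookup or the Function call.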

Bug Fixes

A few bugs to call out in this release following wider feedback and adoption of the framework from our excellent internal/external communities.

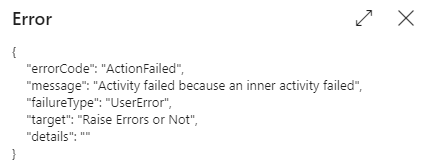

- The view [procfwk].[LastExecution] has had a where clause added to exclude records without end date/time values. This allows unique values to be joined in the Power BI reporting model, which previously caused an error in the Overview Dashboard visuals.

- When an Activity failure occurs the Error Code is returned, which the framework captures in the [procfwk].[ErrorLog] table. I had wrongly expected that this Error Code would always be a numeric value given this Microsoft Docs page. However, if the Activity error comes from within a nested Activity (eg. If Condition, ForEach, Until), the parent Activity also returns an Error Code which isn’t numeric. Instead this reports a value of ‘ActionFailed’ as seen in the screen snippet on the right. To fix this the data type of the Error Code attribute in the table has been updated from an INT to VARCHAR.

- Updates to the database project pre and post deployment scripts now allow for completely new framework metadata database setups. Previously, publish errors were generated using the DACPAC when trying to back up the Execution Log and Error Log tables if they didn’t already exist. Now fixed and handled.

- The PowerShell script to deploy the framework pipelines has been updated to use the cmdlet New-AzResource instead of the Data Factory specific version Set-AzDataFactoryV2Pipeline. This is due to an underlying bug in the Microsoft .Net libraries whereby the Wait Activity class doesn’t currently allow the duration property to be an expression, although the actual Azure Resource does. I’ll revert this back to the specific cmdlet once Microsoft have updated the SDK as it offers better validation on the JSON definition. Specifically, this relates to the namespace Microsoft.Azure.Management.DataFactory.Models.

Other Changes

- The stored procedure [procfwk].[SetLogActivityFailed] has a new parameter to improve the status values reported from actual operational errors. The procedure is also now called for the Infant pipeline failure path as well as the Child failure path.

- MIT License, Contributing and Code of Conduct details added to the repository using GitHub community templates.

- ForEach batch count within the Child pipeline hardcoded to 40 parallel threads rather than using the default (blank) value of 20 parallel threads for Worker pipeline iterations.

- The stored procedure [procfwk].[CheckMetadataIntegrity] has been extended to include checks for the new email alerting properties.

- Azure Data Studio Notebook updates with handy code snippets to support the new email alerting options.

- More details about the metadata required have been added to the Deployment Steps markdown page.

- Glossary updated with new definitions for email alerting.

That concludes the release notes for this version of ADF.procfwk.

Please reach out if you have any questions or want help updating your implementation from the previous release.

Many thanks
